The Issue

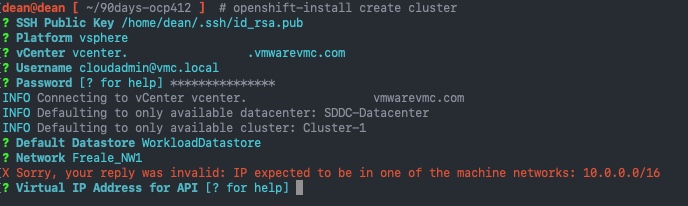

When running the command:

    openshift-install create cluster

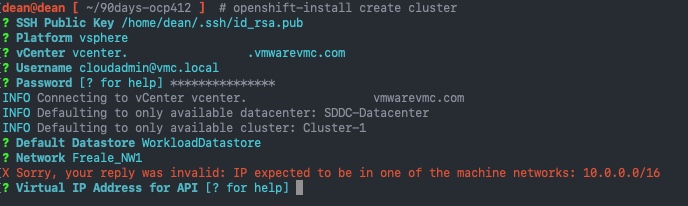

and you provide an API IP address that is outside the 10.0.0.0/16 CIDR range, you receive the error below:

    INFO Defaulting to only available network: VM Network
    X Sorry, your reply was invalid: IP expected to be in one of the machine networks: 10.0.0.0/16
    ? The VIP to be used for the OpenShift API.

The Cause

This is a known bug in the openshift-install tool (GitHub PR, Red Hat Article), whereby the installer is hardcoded to only accept addresses in the 10.0.0.0/16 range.
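
To picture what that validation amounts to, here is a minimal bash sketch of an "is this IP inside this CIDR" test. It is not the installer's actual code, and the function names ip_to_int and ip_in_cidr are made up for illustration. It only handles plain dotted-quad IPv4 addresses.

```shell
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=.
  set -- $1                       # split the address on dots
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Succeed (exit 0) if the IP in $1 falls inside the CIDR in $2.
ip_in_cidr() {
  local ip="$1" net="${2%/*}" bits="${2#*/}"
  local mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  [ $(( $(ip_to_int "$ip") & mask )) -eq $(( $(ip_to_int "$net") & mask )) ]
}

# The API VIP used later in this post fails against the hardcoded range.
ip_in_cidr 192.168.200.192 10.0.0.0/16 || echo "rejected: not in 10.0.0.0/16"
```

The same helper is handy later on for confirming that your real VIPs sit inside the subnet you put in install-config.yaml.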

The Fix

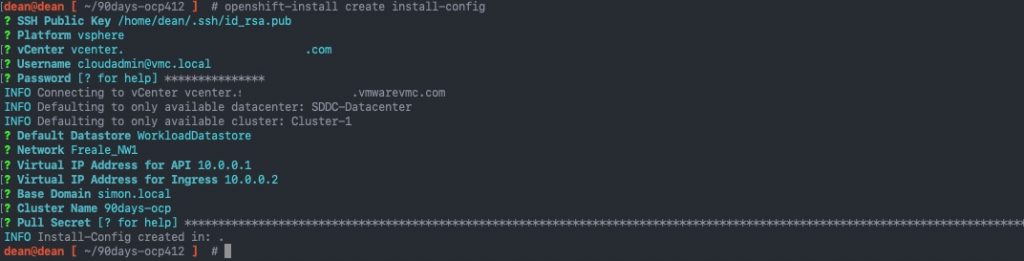

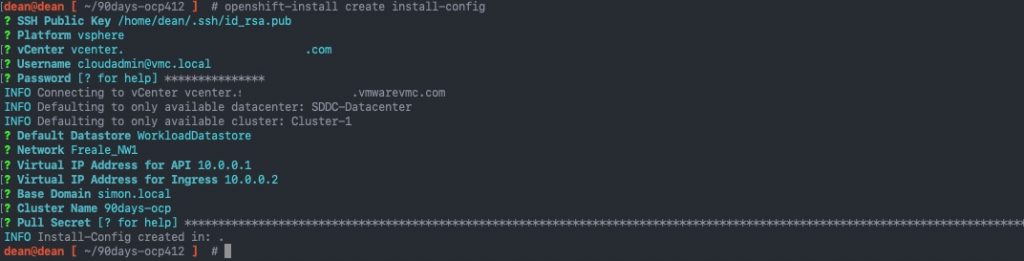

The current workaround is to run openshift-install create install-config, provide IP addresses in the 10.0.0.0/16 range, and then edit the install-config.yaml file manually before running openshift-install create cluster. That command reads the existing install-config.yaml file and creates the cluster, rather than presenting you with another wizard.
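
As a rough sketch, the three steps can be strung together in a small shell function. The function name create_cluster_with_edit and the INSTALLER variable are hypothetical conveniences for this example. The --dir flag tells openshift-install which directory holds the installation assets.

```shell
# Hypothetical wrapper around the workaround: generate the config,
# pause for a manual edit, then build the cluster from the edited file.
create_cluster_with_edit() {
  dir="${1:-.}"                                  # asset directory (defaults to current)
  installer="${INSTALLER:-openshift-install}"    # override only for testing

  # Step 1: run the wizard, answering with addresses in 10.0.0.0/16.
  "$installer" create install-config --dir "$dir" || return 1

  # Step 2: fix machineNetwork, apiVIP and ingressVIP by hand.
  "${EDITOR:-vi}" "$dir/install-config.yaml" || return 1

  # Step 3: the installer picks up the edited file instead of re-prompting.
  "$installer" create cluster --dir "$dir"
}
```

Calling create_cluster_with_edit ./ocp-cluster would run all three steps against a directory of your choosing.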

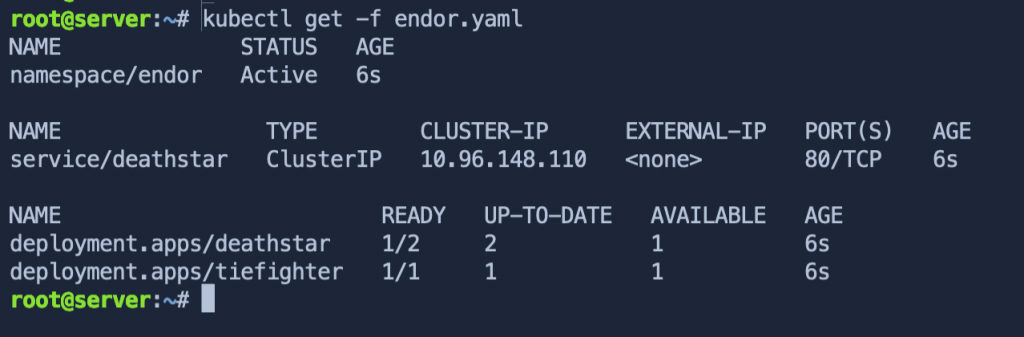

In the wizard (screenshot below), I’ve provided IPs in the 10.0.0.0/16 range, and set my base domain and cluster name as well. The final piece is to paste in my Pull Secret from the Red Hat Cloud console.

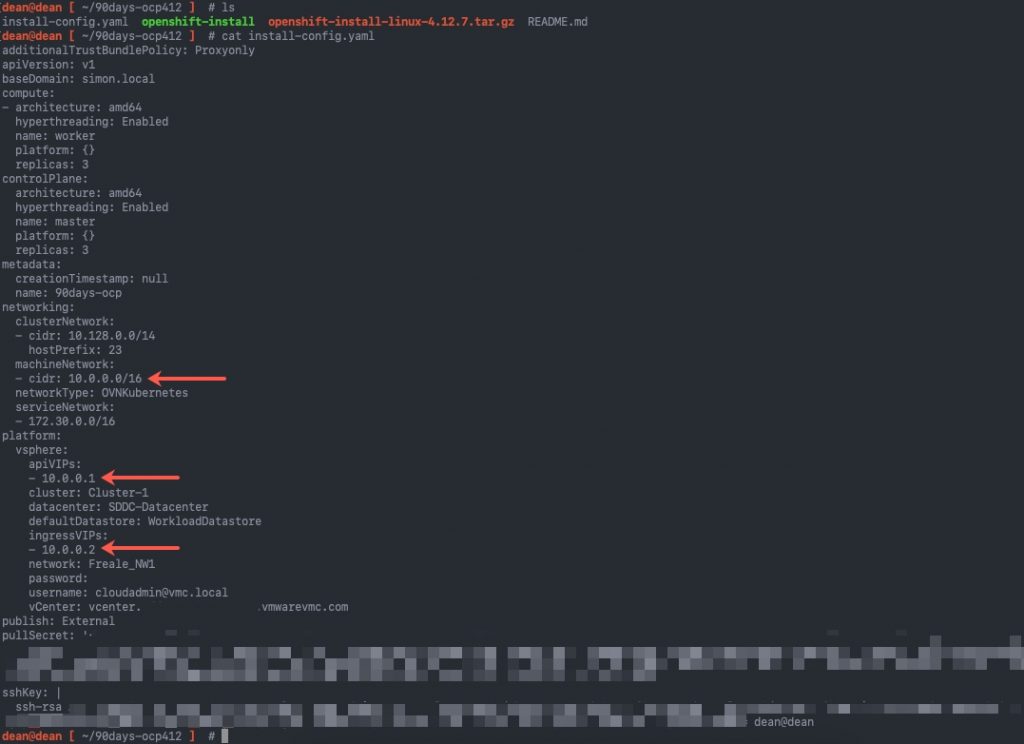

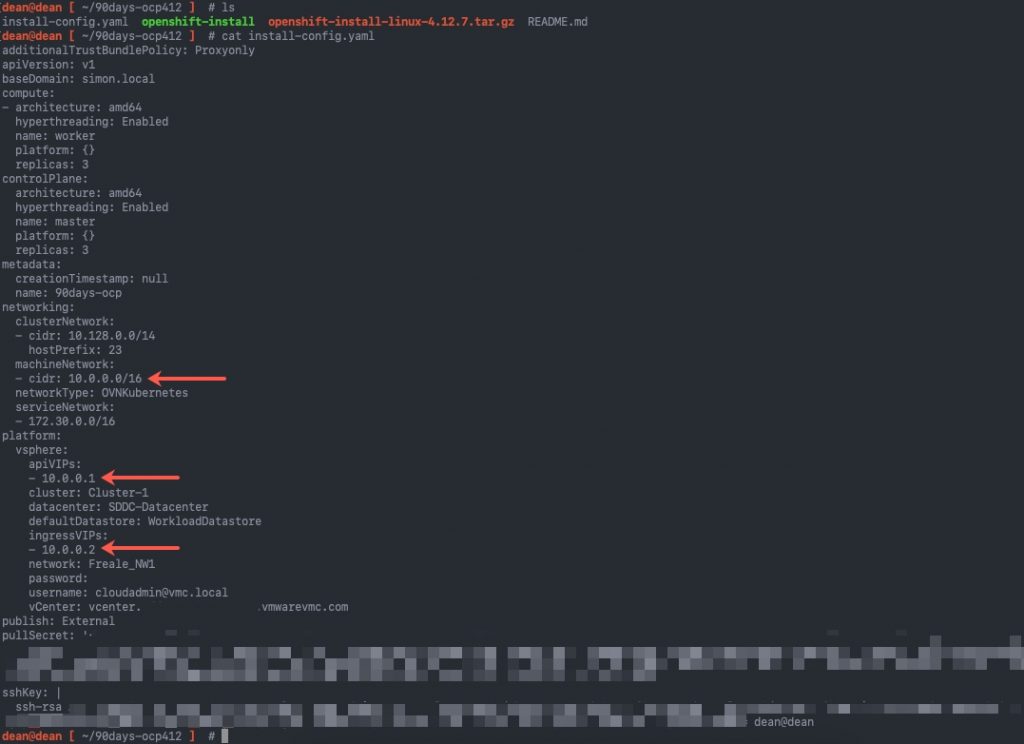

Now if I run ls in my current directory, I’ll see the install-config.yaml file. I recommend saving a copy of this file before you run the create cluster command, because the installer removes it during that step as it contains plain-text passwords.
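
One way to take that copy is a small helper like the one below. It is only a sketch: the function name backup_install_config and the default backup location are assumptions for this example. Since the file holds secrets, the copy is restricted to your own user.

```shell
# Hypothetical helper: copy install-config.yaml out of the asset
# directory before the installer consumes it.
backup_install_config() {
  install_dir="${1:-.}"
  backup_dir="${2:-$HOME/install-config-backups}"   # assumed location
  src="$install_dir/install-config.yaml"

  [ -f "$src" ] || { echo "no install-config.yaml in $install_dir" >&2; return 1; }
  mkdir -p "$backup_dir" || return 1
  cp "$src" "$backup_dir/install-config.yaml" || return 1
  chmod 600 "$backup_dir/install-config.yaml"       # contains plain-text secrets
}
```

Run it as backup_install_config . ~/ocp-backups before the create cluster step, and copy the file back if you need to rerun the install.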

I’ve highlighted in the below image the lines we need to alter.

For the section:

    machineNetwork:
    - cidr: 10.0.0.0/16

This needs to be changed to the subnet the nodes will run on. In the platform section, you need to set the VIP addresses to match your DNS records.

    platform:
      vsphere:
        apiVIP: 192.168.200.192      # This is your api.{cluster_name}.{base_domain} DNS record
        cluster: Cluster-1
        folder: /vEducate-DC/vm/OpenShift/
        datacenter: vEducate-DC
        defaultDatastore: Datastore01
        ingressVIP: 192.168.200.193  # This is your *.apps.{cluster_name}.{base_domain} DNS record
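
If you would rather script these edits than make them by hand, a sed-based sketch along these lines should work. The function name patch_install_config is made up for this example, and the 192.168.200.0/24 subnet in the usage line is an assumption about the network the VIPs above belong to, so substitute your own. The sketch also assumes the file still has the layout the wizard generates.

```shell
# Hypothetical helper: swap the placeholder machine network and VIPs
# in a wizard-generated install-config.yaml for the real values.
patch_install_config() {
  file="$1"; cidr="$2"; api_vip="$3"; ingress_vip="$4"
  tmp="$file.tmp"

  sed \
    -e "s|^\([[:space:]]*- cidr: \)10\.0\.0\.0/16[[:space:]]*\$|\1$cidr|" \
    -e "s|^\([[:space:]]*apiVIP: \).*|\1$api_vip|" \
    -e "s|^\([[:space:]]*ingressVIP: \).*|\1$ingress_vip|" \
    "$file" > "$tmp" && mv "$tmp" "$file"
}

# Usage (the subnet is an assumed value):
# patch_install_config install-config.yaml 192.168.200.0/24 192.168.200.192 192.168.200.193
```

Only the line that reads exactly "- cidr: 10.0.0.0/16" is rewritten, so other cidr entries in the file with different values are left alone.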

Now that we have a correctly configured install-config.yaml file, we can proceed with the installation of the cluster. After you run the openshift-install create cluster command, the process is hands-off from this point forward. The installer logs to the console, and you can change the verbosity by adding the --log-level= argument to the end of the command (for example, --log-level=debug).

Regards

Dean Lewis